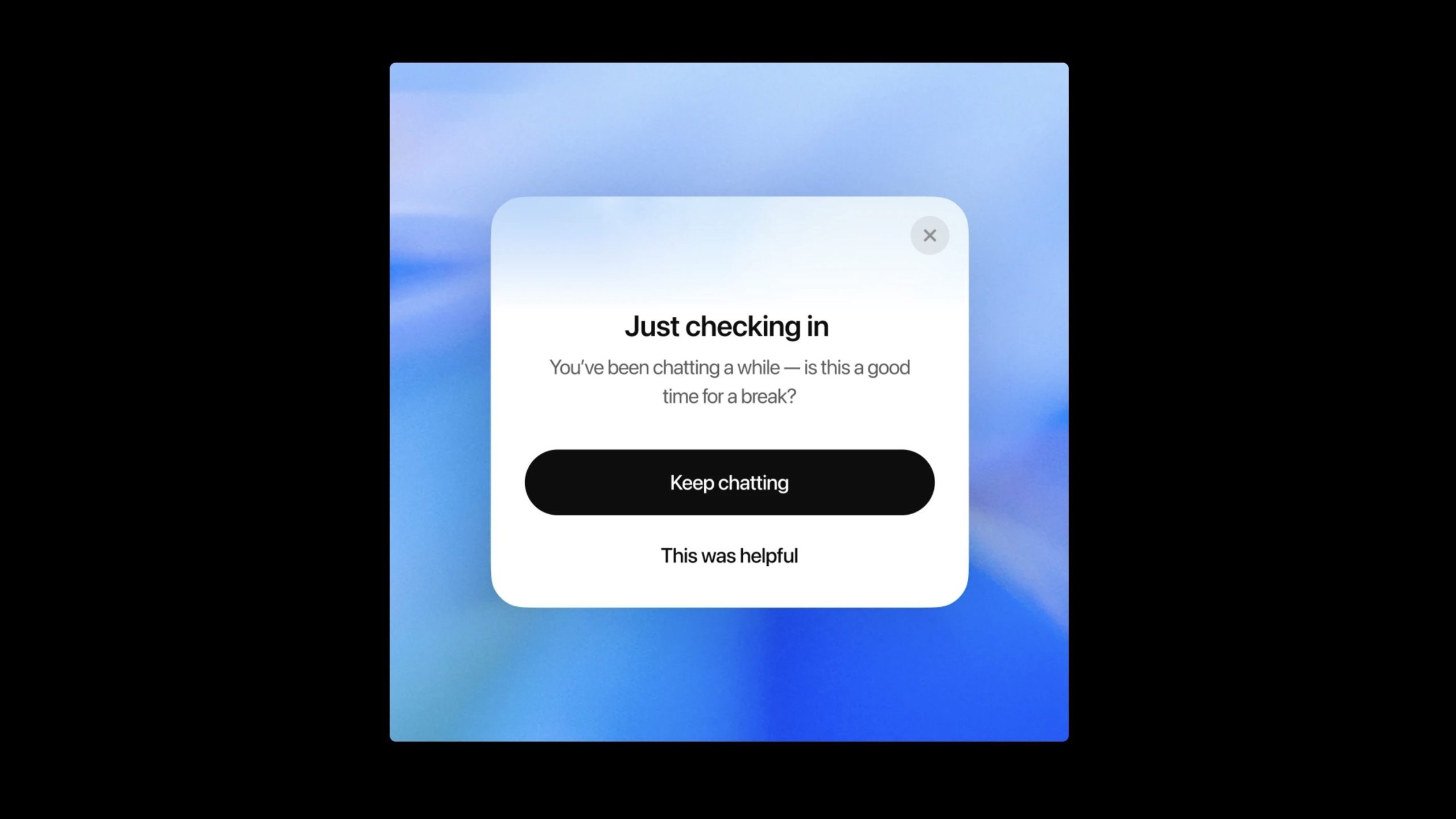

If your daily routine involves frequently chatting with ChatGPT, you might be surprised to see a new pop-up this week. After a lengthy conversation, you might be presented with a “Just checking in” window, with a message reading: “You’ve been chatting a while—is this a good time for a break?”

The pop-up gives you the option to “Keep chatting,” or even to select “This was helpful.” Depending on your outlook, you might see this as a good reminder to put down the app for a while, or as a condescending note implying that you don’t know how to limit your own time with a chatbot.

Don’t take it personally. OpenAI might seem to care about your usage habits with this pop-up, but the real reason behind the change is a bit darker than that.

Addicted to ChatGPT

This new usage reminder was part of a larger announcement from OpenAI on Monday, titled “What we’re optimizing ChatGPT for.” In the post, the company says it cares about how you use ChatGPT, and that while it wants you to use the service, it also sees value in you using it less. Part of that comes through features like ChatGPT Agent, which can take actions on your behalf, and part comes through making the time you do spend with ChatGPT more effective and efficient.

That’s all well and good: If OpenAI wants to make ChatGPT conversations just as useful in a fraction of the time, so be it. But this isn’t simply coming from a desire to have users speedrun their interactions with ChatGPT; rather, it’s a direct response to how addictive ChatGPT can be, especially for people who rely on the chatbot for mental or emotional support.

You don’t need to read between the lines on this one, either. To OpenAI’s credit, the company directly addresses serious issues some of its users have recently experienced with the chatbot, including an update earlier this year that made ChatGPT far too agreeable. Chatbots tend to be enthusiastic and friendly, but the update to the 4o model took this too far: ChatGPT would affirm that all of your ideas, whether good, bad, or horrible, were valid. In the worst cases, the bot ignored signs of delusion and fed directly into those users’ warped perspectives.

OpenAI openly acknowledges this happened, though the company believes these instances were “rare.” Still, it is tackling the problem head-on: In addition to these reminders to take a break, the company says it’s improving its models to look out for signs of distress and to steer clear of answering difficult personal questions, like “Should I break up with my partner?” OpenAI says it’s even collaborating with experts, clinicians, and medical professionals in a variety of ways to get this done.

We should all use AI a bit less

It’s definitely a good thing that OpenAI wants you using ChatGPT less, and that it’s actively acknowledging the chatbot’s issues and working to address them. But I don’t think it’s enough to rely on OpenAI here. What’s in the company’s best interest won’t always be what’s in yours. And, in my opinion, we could all benefit from stepping back from generative AI.

As more and more people turn to chatbots for support with work, relationships, or their mental health, it’s important to remember that these tools are neither perfect nor fully understood. As we saw with GPT-4o, AI models can be flawed and can start encouraging dangerous ways of thinking. AI models can also hallucinate; in other words, they can make things up entirely. You might think the information your chatbot provides is 100% accurate, but it may be riddled with errors or outright falsehoods. How often do you fact-check your conversations?

Trusting AI with your private and personal thoughts also poses a privacy risk: Companies like OpenAI store your chats, and those conversations come with none of the legal protections you’d have with a licensed medical professional or legal representative. On top of that, emerging studies suggest that the more we rely on AI, the less we rely on our own critical thinking skills. While it may be hyperbolic to say that AI is making us “dumber,” I’m concerned by how much mental effort we’re outsourcing to these new bots.

Chatbots aren’t licensed therapists; they tend to make things up; they have few privacy protections; and they may even encourage delusional thinking. It’s great that OpenAI wants you using ChatGPT less, but we might want to use these tools even less than that.

Disclosure: Lifehacker’s parent company, Ziff Davis, filed a lawsuit against OpenAI in April, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.