Did you know you can customize Google to filter out garbage? Take these steps for better search results, including adding my work at Lifehacker as a preferred source.

You should never assume what you say to a chatbot is private. When you interact with one of these tools, the company behind it likely collects data from the session, often using it to train the underlying AI models. Unless you've explicitly opted out of this practice, you've probably trained more than a few models without realizing it.

Anthropic, the company behind Claude, has taken a different approach. Until now, the company's privacy policy stated that Anthropic would not use user inputs or outputs to train Claude unless you either reported the material to the company or opted in to training. That didn't mean Anthropic abstained from collecting data in general, but you could rest easy knowing your conversations weren't feeding future versions of Claude.

That's now changing. As reported by The Verge, Anthropic will start training its Claude AI models on user data. That means any new chats or coding sessions you have with Claude can be used to refine and improve the models' performance.

The change won't affect past sessions, as long as you leave them alone. However, if you resume an old chat or coding session after the change, Anthropic can use any new data generated in that session for training.

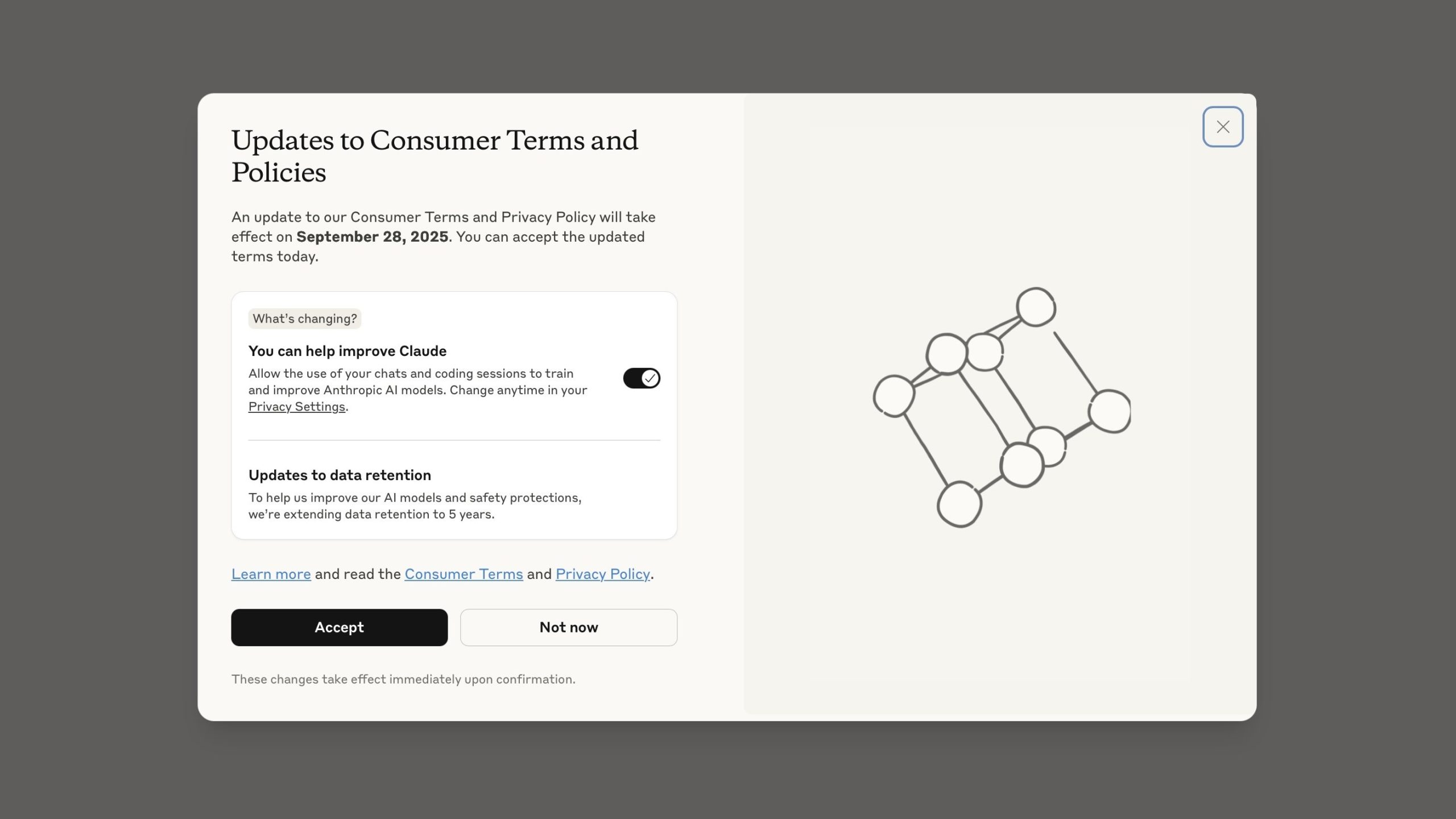

This won't happen without your permission, at least not right away. Anthropic is giving users until Sept. 28 to make a decision. New users will see the option when they set up their accounts, while existing users will see a permission popup when they log in. However, it's easy to imagine some of us clicking through these menus and popups too quickly and accidentally agreeing to data collection we never meant to allow.

To Anthropic's credit, the company says it tries to hide sensitive user data through "a combination of tools and automated processes," and that it does not sell your data to third parties. Still, I don't want my conversations with AI to train future models. If you feel the same, here's how to opt out.

How to opt out of Anthropic AI training

If you're an existing Claude user, you'll see a popup the next time you log in to your account. This popup, titled "Updates to Consumer Terms and Policies," explains the new rules and opts you into training by default. To opt out, make sure the toggle next to "You can help improve Claude" is turned off. (When it's off, the toggle sits to the left and shows an X, rather than sitting to the right with a checkmark.) Hit "Accept" to lock in your choice.

If you've already accepted this popup and aren't sure whether you opted in to this data collection, you can still opt out. To check, open Claude and head to Settings > Privacy > Privacy Settings, then make sure the "Help improve Claude" toggle is turned off. Note that turning this setting off won't undo the collection of any data Anthropic gathered while you were opted in.